I worked on a project with Jonathan Palucci exploring the trainability of a certain simple kind of tensor network, called the Tensor Trains, or Matrix Product States.

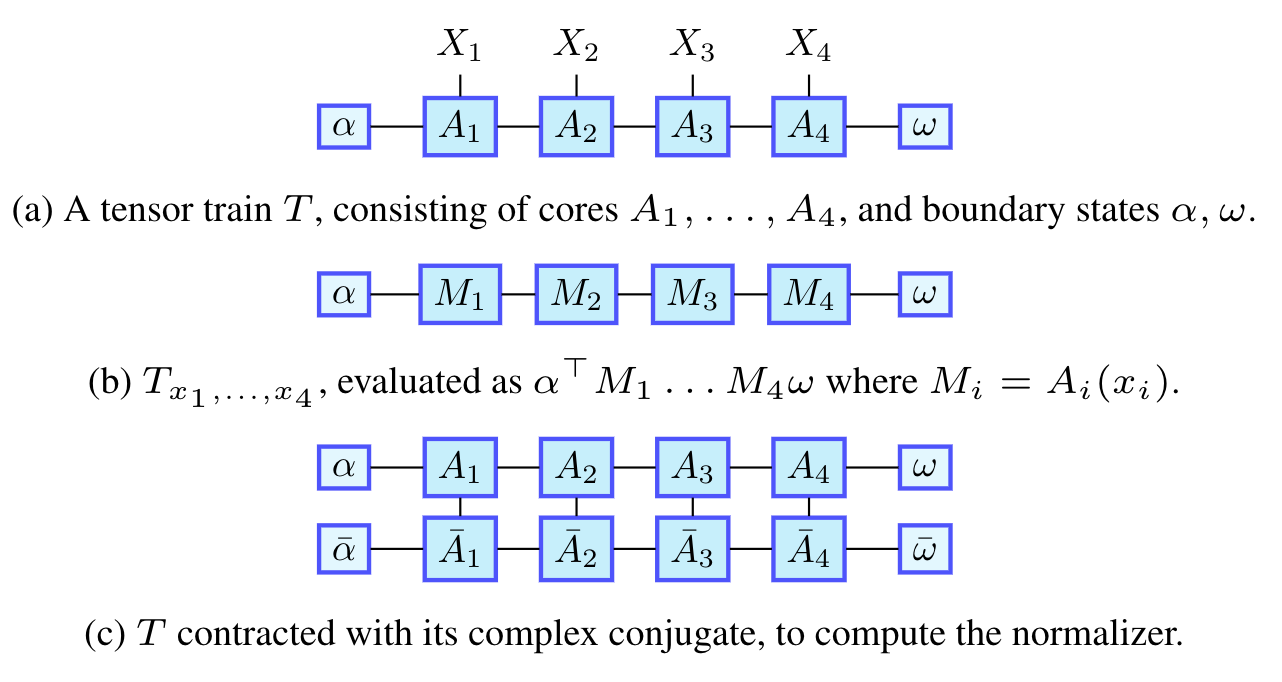

There is a general correspondence between tensor networks and graphical models, and in particular, when restricted to non-negative valued parameters, Matrix Product States are equivalent to Hidden Markov Models (HMMs)). Glasser et al. 2019 discussed this correspondence, and proved theoretical results about these non-negative models, as well as similar real– and complex–valued tensor trains. They supplemented their theoretical results with evidence from numerical experiments. In this project, we re-implemented models from their paper, and also implemented time-homogeneous versions of their models. We replicated some of their results for non-homogeneous models, adding a comparison with homogeneous models on the same data. We found evidence that homogeneity decreases ability of the models to fit non-sequential data, but preliminarily observed that on sequential data (for which the assumption of homogeneity is justified), homogeneous models achieved an equally good fit with far fewer parameters. Surprisingly, we also found that the more powerful non time-homogeneous positive MPS performs identically to a time homogeneous HMM.

📊 Poster –> here (PDF).

📄 Writeup titled A practical comparison of tensor train models: The effect of homogeneity –> here (PDF).

💻 Code –> on GitHub.