Linguistic Dependencies and Statistical Dependence

At EMNLP (virtually), I presented joint work with Wenyu Du, Alessandro Sordoni, and Timothy J. O’Donnell titled *Linguistic Dependencies and Statistical Dependence*.

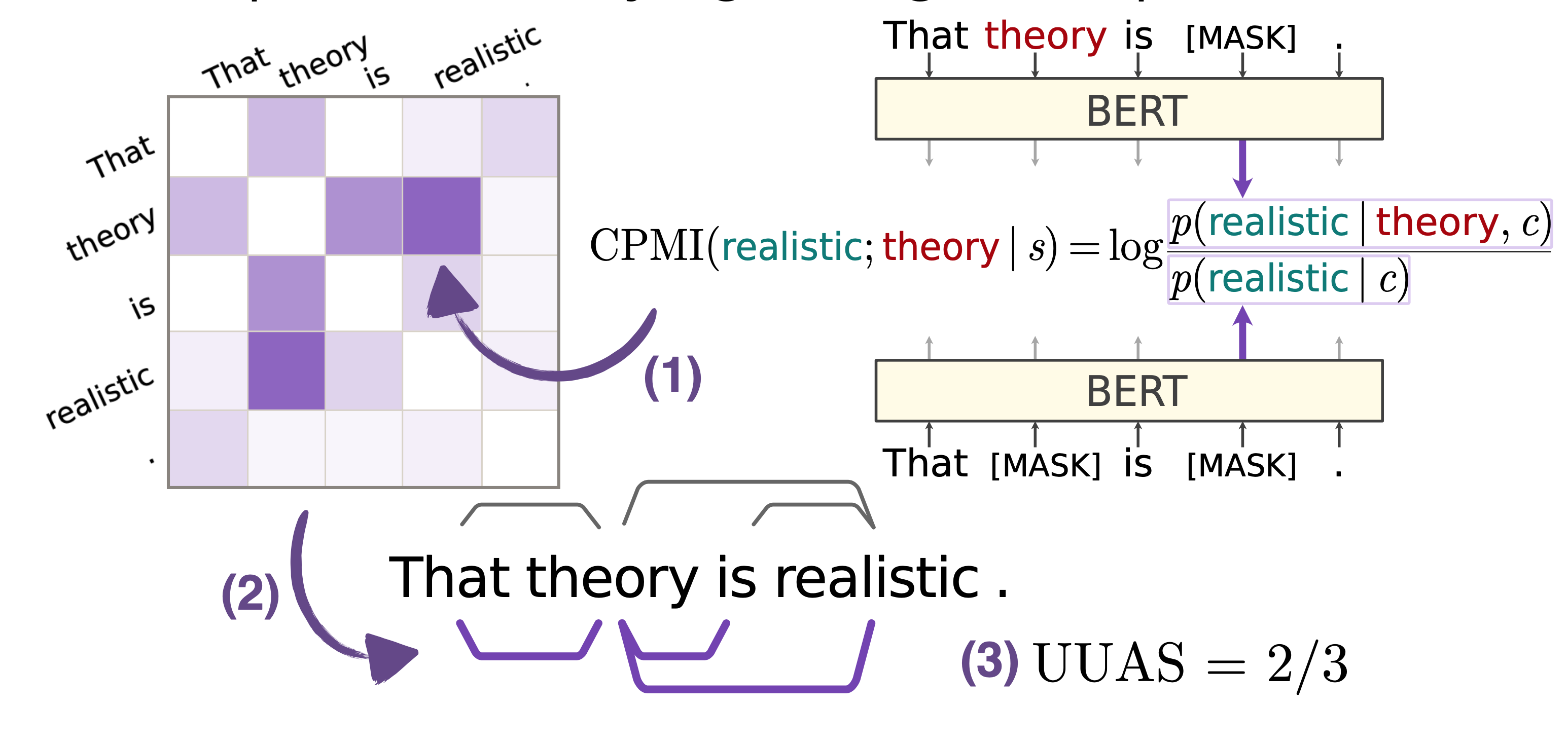

In this work, we compared linguistic dependency trees to dependency trees representing statistical dependence between words, which we extracted from mutual information estimates computed with pretrained language models. Scoring the extracted trees for accuracy against the linguistic trees, we found that they were only as accurate as a simple linear baseline that connects adjacent words, even with strong controls. We also found considerable differences between pretrained LMs.
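To make the comparison concrete, here is a minimal sketch of the kind of pipeline described above. It assumes that a tree is extracted as a maximum spanning tree over a symmetric matrix of pairwise word scores (standing in for mutual information estimates), and that accuracy is the share of gold edges recovered with direction ignored. The function names, the use of Prim's algorithm, and the toy scores are illustrative assumptions, not the code or data used in the paper.

```python
def max_spanning_tree(scores):
    """Prim's algorithm on a dense symmetric score matrix.

    Returns a set of undirected edges (i, j) with i < j.
    Assumption: higher score = stronger statistical dependence.
    """
    n = len(scores)
    in_tree = {0}
    edges = set()
    while len(in_tree) < n:
        # Pick the highest-scoring edge joining the tree to a word not yet in it.
        best = None
        for i in in_tree:
            for j in range(n):
                if j in in_tree:
                    continue
                if best is None or scores[i][j] > best[0]:
                    best = (scores[i][j], i, j)
        _, i, j = best
        in_tree.add(j)
        edges.add((min(i, j), max(i, j)))
    return edges


def linear_baseline(n):
    """Connect each word to the next one: (0, 1), (1, 2), ..."""
    return {(i, i + 1) for i in range(n - 1)}


def _undirected(edges):
    """Normalize edges so (head, dep) and (dep, head) compare equal."""
    return {(min(a, b), max(a, b)) for a, b in edges}


def undirected_accuracy(predicted, gold):
    """Fraction of gold edges recovered by the predicted tree, ignoring direction."""
    gold_set = _undirected(gold)
    return len(_undirected(predicted) & gold_set) / len(gold_set)


words = ["She", "ate", "the", "apple"]
# Hypothetical pairwise scores: made-up numbers, not language-model output.
pair_scores = {(0, 1): 2.0, (0, 2): 0.1, (0, 3): 0.3,
               (1, 2): 1.6, (1, 3): 1.0, (2, 3): 1.2}
n = len(words)
scores = [[0.0] * n for _ in range(n)]
for (i, j), s in pair_scores.items():
    scores[i][j] = scores[j][i] = s

# Gold linguistic tree as (head, dependent) pairs: ate->She, ate->apple, apple->the.
gold = {(1, 0), (1, 3), (3, 2)}

tree = max_spanning_tree(scores)
print(sorted(tree))                                   # [(0, 1), (1, 2), (2, 3)]
print(undirected_accuracy(tree, gold))                # 0.6666666666666666
print(undirected_accuracy(linear_baseline(n), gold))  # 0.6666666666666666
```

The spanning-tree step guarantees that every word is connected exactly once without cycles, so extracted trees and gold trees have the same number of edges and an undirected edge-overlap score is directly comparable to the adjacent-word baseline.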